Read this article if you want to learn about the principles of clustering for a single-tier J2EE application. I know that clustering is a commonly found pattern in today’s IT world. I nevertheless wanted to cover it for those with less experience in a short entry as it is an essential pattern to know.

I outlined the objective, variations and commonly found patterns. After reading this article you should be able to distinguish between clustering and availability, know the advantages and disadvantages of clustering and be able to recognize the infrastructure impact a clustered application has.

1. Objective

Suitable deployment architecture is developed based on balancing non-functional requirements and constraints. This entry outlines the design rationale behind building a cluster with single-node deployment configurations, their advantages, disadvantages and underlying design decisions.

This pattern describes an Architecture that consists of an HTTP Server, an Application Server and a Database Server. The Application Server contains an HTTP-Container and an EJB-Container. What is particular about this Architecture is that the HTTP Server and the Application Server are deployed on one physical node (i.e. one physical server).

While this pattern can be used as one aspect of addressing scalability in a Software Architecture, it is not all there is to scalability. This is why I specifically reduced it to “clustering” in the title.

I specifically named it a pattern for network-centric applications because it is frequently found in Web applications, but it is just as applicable to any network-centric application that offers services to a network, be it for end-users or as a business-to-business platform.

The conceptual ideas of this pattern may be applied to other technological environments but the pattern itself is geared towards J2EE based Architectures.

2. Pattern

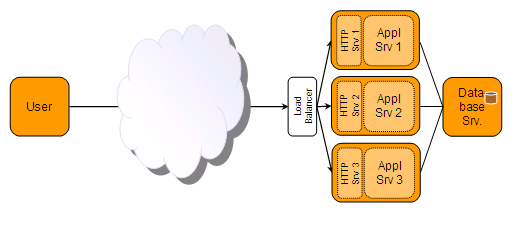

Figure 1 – Clustered Single-Node deployment configuration

3. Design Rationale

Figure 1 shows a clustered single-node configuration for a network-centric J2EE application. A cluster is, simply put, a collection of nodes that – together – comprise the entire application.

The figure represents a typical single-tier server application as outlined in a previous article. Repeating it briefly, we have:

- A client tier, represented as node “User”,

- Three server nodes (application tier) with an HTTP Server and Application Server on each node,

- A load balancer, and

- A database server.

New in this configuration is that the application tier has been replicated onto three nodes, that a load balancer has been added and that the database server is shared by all server nodes.

Users are located on the network (Intranet or Internet) and their requests are routed to the application’s load balancer, which distributes them to a particular application tier node based on a particular algorithm.

To the client, however, all nodes in the cluster appear to be one logical application. Which responsibility a particular node in a cluster has is up to the designer of the application. The nodes can all have identical responsibilities or have disparate responsibilities.

A common reason for applying clustering is scalability. Clustering is not the only means of scaling an application, but it is an important one. Clustering makes it possible to add server nodes if the capacity of the configuration needs to be increased.

Scalability is the ability to increase the capacity of an application by adding application servers or nodes. What types of scalability there are and how those are characterized will be explained in a different article.

An additional benefit clustering provides is increased availability. This, however, depends on the design of the Architecture. If each node has disparate responsibilities, a cluster will not increase availability, because no other node can take over when one fails. Scalability and availability do not necessarily overlap and should therefore not be confused.

Clusters in a web-centric environment are typically built with so-called load balancers. A load balancer is an additional node, often a hardware device, that distributes incoming requests to the individual nodes based on a particular algorithm. One example is a “round robin” algorithm, which distributes incoming requests evenly across all nodes: the first request goes to server 1, the second to server 2, the third to server 3, the fourth to server 1 again, and so on.
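The round-robin distribution described above can be sketched in a few lines of Java. This is only an illustration of the algorithm, not how any particular load balancer product implements it; the class name `RoundRobinBalancer` and the node names are assumptions made for this example.

```java
import java.util.List;
import java.util.concurrent.atomic.AtomicInteger;

// Hypothetical round-robin selector; class and node names are illustrative only.
class RoundRobinBalancer {
    private final List<String> nodes;
    private final AtomicInteger counter = new AtomicInteger();

    RoundRobinBalancer(List<String> nodes) {
        if (nodes.isEmpty()) {
            throw new IllegalArgumentException("at least one node is required");
        }
        this.nodes = List.copyOf(nodes);
    }

    // Returns the node that should receive the next request.
    String nextNode() {
        // floorMod keeps the index non-negative even if the counter overflows.
        int index = Math.floorMod(counter.getAndIncrement(), nodes.size());
        return nodes.get(index);
    }

    public static void main(String[] args) {
        RoundRobinBalancer lb =
                new RoundRobinBalancer(List.of("server1", "server2", "server3"));
        for (int request = 1; request <= 4; request++) {
            System.out.println("request " + request + " -> " + lb.nextNode());
        }
    }
}
```

With three nodes, the fourth request wraps around to the first node again, which is exactly the sequence described in the paragraph above.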

Often there are more sophisticated algorithms, which may also take the current load of the participating nodes, their availability and other factors into account. For example, a load balancer may always forward the next request to the least utilized node.
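As a rough sketch of a load-aware algorithm, the following Java class always picks the node with the fewest requests currently in flight. Using the number of active requests as the measure of utilization is an assumption for this example; real load balancers may use CPU load, response times or health checks instead. The class name `LeastLoadedBalancer` is hypothetical.

```java
import java.util.LinkedHashMap;
import java.util.Map;

// Hypothetical "least utilized node" selector; utilization is approximated
// by the number of requests a node is currently processing (an assumption).
class LeastLoadedBalancer {
    // LinkedHashMap keeps insertion order, so ties resolve to the first node.
    private final Map<String, Integer> activeRequests = new LinkedHashMap<>();

    LeastLoadedBalancer(String... nodes) {
        for (String node : nodes) {
            activeRequests.put(node, 0);
        }
    }

    // Picks the node with the fewest active requests and counts the new one.
    synchronized String acquire() {
        String best = null;
        for (Map.Entry<String, Integer> entry : activeRequests.entrySet()) {
            if (best == null || entry.getValue() < activeRequests.get(best)) {
                best = entry.getKey();
            }
        }
        if (best == null) {
            throw new IllegalStateException("no nodes configured");
        }
        activeRequests.merge(best, 1, Integer::sum);
        return best;
    }

    // Called when a node has finished processing a request.
    synchronized void release(String node) {
        activeRequests.computeIfPresent(node, (key, count) -> Math.max(0, count - 1));
    }
}
```

A caller would invoke `acquire()` before forwarding a request and `release(node)` once the response has been sent, so that the counters reflect the current load.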

From time to time you may hear a term such as “sticky”. Load balancers can be configured “sticky”. This means that once a request for a particular client has been forwarded to a particular node all future requests of that same client will be forwarded to the same node. This can have design reasons, but more often than not it is used as a work-around to design problems.
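A sticky configuration can be pictured as a lookup table from client to node. The sketch below assumes that clients are identified by some string (for example a session cookie value) and that the first request of a client is assigned round robin; both are assumptions for illustration, and `StickyBalancer` is a hypothetical name.

```java
import java.util.List;
import java.util.Map;
import java.util.concurrent.ConcurrentHashMap;
import java.util.concurrent.atomic.AtomicInteger;

// Hypothetical sticky router: the first request of a client is assigned
// round robin, all later requests of that client go to the same node.
class StickyBalancer {
    private final List<String> nodes;
    private final AtomicInteger counter = new AtomicInteger();
    private final Map<String, String> assignments = new ConcurrentHashMap<>();

    StickyBalancer(List<String> nodes) {
        if (nodes.isEmpty()) {
            throw new IllegalArgumentException("at least one node is required");
        }
        this.nodes = List.copyOf(nodes);
    }

    // Returns the node for this client, assigning one on first contact.
    String route(String clientId) {
        return assignments.computeIfAbsent(clientId,
                id -> nodes.get(Math.floorMod(counter.getAndIncrement(), nodes.size())));
    }
}
```

The table itself is state that lives in the load balancer, and if the assigned node fails, the client's state on that node is lost. This is one reason why stickiness is a work-around rather than a substitute for a stateless or state-sharing design.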

While there are other important aspects to the design of clustered applications, the most important rule is that one of the following two conditions holds:

- The application does not have any state at all.

- If there is a state, it is present on all nodes in the cluster.

Why is this so important? If incoming requests are randomly distributed to one of the nodes in the cluster, the processing node must have all knowledge to process that request.

This can be accomplished if the application tier does not have a state, i.e. the node has all the information required to process a request on its own or receives it with the request.

Alternatively, if the server requires additional information, this information (called the state) must be present on all nodes in the cluster. If all nodes in the cluster share this state, the load balancer can distribute incoming requests to any node in the cluster. If, for some reason, a particular request builds a state on a particular node that is required for processing future requests, the load balancer must be configured sticky so that later requests are forwarded to the node that possesses the state.

A typical example of a state that needs to be shared among nodes in web-centric applications is the HTTP session. If a session is distributed to all nodes in the cluster once it has been created, the load balancer can freely distribute requests to any node in the cluster. If it is not distributed, the load balancer needs to be configured sticky so that future requests of that same user are forwarded to the node that hosts the session.

Sharing state can be accomplished with different technologies, such as databases if it needs to be transaction-safe, or caches and memory-to-memory replication if it needs to be very fast.
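The difference between shared and node-local state can be made concrete with a small simulation. In this sketch a plain in-memory map stands in for whatever mechanism shares the state (a database, a cache or replication); the class `AppNode` and the request counter are hypothetical and exist only to make the effect visible.

```java
import java.util.Map;
import java.util.concurrent.ConcurrentHashMap;

// Hypothetical application node whose per-client state lives in a store
// handed in by the caller; the map stands in for a real shared store.
class AppNode {
    private final Map<String, Integer> state;

    AppNode(Map<String, Integer> state) {
        this.state = state;
    }

    // Counts the requests seen for a client, using the state this node can reach.
    int handle(String clientId) {
        return state.merge(clientId, 1, Integer::sum);
    }

    public static void main(String[] args) {
        // Shared state: both nodes use the same store, any node can serve any request.
        Map<String, Integer> shared = new ConcurrentHashMap<>();
        AppNode node1 = new AppNode(shared);
        AppNode node2 = new AppNode(shared);
        node1.handle("alice");
        System.out.println("shared store, node2 sees count " + node2.handle("alice"));

        // Node-local state: node4 knows nothing about the request node3 processed.
        AppNode node3 = new AppNode(new ConcurrentHashMap<>());
        AppNode node4 = new AppNode(new ConcurrentHashMap<>());
        node3.handle("alice");
        System.out.println("local stores, node4 sees count " + node4.handle("alice"));
    }
}
```

With the shared store the second node continues where the first one left off. With node-local stores it starts from scratch, which is the situation that forces a sticky load balancer.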
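For a session to be distributed, the objects stored in it have to be transferable between nodes. The following sketch assumes that the container replicates sessions by Java serialization, which is common but product-specific, so check your application server's documentation. `ShoppingCart` and `copyToOtherNode` are hypothetical names; the method only imitates what replication does conceptually.

```java
import java.io.ByteArrayInputStream;
import java.io.ByteArrayOutputStream;
import java.io.IOException;
import java.io.ObjectInputStream;
import java.io.ObjectOutputStream;
import java.io.Serializable;
import java.util.ArrayList;
import java.util.List;

// Hypothetical session attribute; Serializable so it can leave the node.
class ShoppingCart implements Serializable {
    private static final long serialVersionUID = 1L;
    private final List<String> items = new ArrayList<>();

    void add(String item) {
        items.add(item);
    }

    List<String> getItems() {
        return List.copyOf(items);
    }

    // Imitates replication: serialize on one node, deserialize on another.
    static ShoppingCart copyToOtherNode(ShoppingCart cart) {
        try {
            ByteArrayOutputStream bytes = new ByteArrayOutputStream();
            try (ObjectOutputStream out = new ObjectOutputStream(bytes)) {
                out.writeObject(cart);
            }
            try (ObjectInputStream in = new ObjectInputStream(
                    new ByteArrayInputStream(bytes.toByteArray()))) {
                return (ShoppingCart) in.readObject();
            }
        } catch (IOException | ClassNotFoundException e) {
            throw new IllegalStateException("session attribute could not be copied", e);
        }
    }
}
```

A round trip like this is a cheap way to check in a unit test that everything placed in the session would survive being moved to another node.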

A very important recommendation is that, for business applications, you do not attempt to develop clustering infrastructure yourself. The complexity and the development effort are too high and the benefits too low for this to pay off in a project of that kind.

A clustered single-node configuration of this kind has advantages as well as disadvantages, which are outlined in the table below based on the important non-functional criteria:

| Non-Functional Requirement | Advantages | Disadvantages |
| --- | --- | --- |
| Performance and Scalability | Clustering is a form of horizontal scaling and adds to the performance and scalability of a single-node configuration. | More care needs to be taken (i.e. effort in design, development and testing) with the design of the application. Clusters of single-tier applications are also less suitable for high static HTTP loads. If you have those, it is better to apply a multi-tier pattern and a static HTTP tier. |
| Availability | Provides availability if all nodes in the cluster provide the same service or if there is more than one node per type of service. | Limited availability benefits if nodes in the cluster host disparate services or if state is not shared among nodes. |
| Security | There are no particular advantages or disadvantages from a mere clustering standpoint. | There are no particular advantages or disadvantages from a mere clustering standpoint. |
| Maintainability and Operations | From a clustering standpoint it is the simplest form of configuration, in particular if all nodes in the cluster provide an identical service. This would make it possible to replicate nodes by cloning. | It is more complex and requires more effort to build and maintain clustered applications and to operate clustered infrastructures. |
| Cost of Ownership | See maintainability and operations. | More hardware, software and administrative resources are required than for single-node installations. |

4. Variations

This pattern describes clustering on the application-server tier. Please note that clustering can, in principle, be employed in every layer or tier of a software application, as long as the design is open enough to make clustering possible.

Please note as well that the needed degree of clustering very much depends on the non-functional requirements and the cost/benefit ratio for the sponsor. Take clustering to the necessary level of complexity but not any further, because clustering will increase not just the application’s throughput but also its complexity and therefore the cost of infrastructure, development, maintenance and operations.

From an infrastructure and operational architecture perspective, clustering can in principle be mixed with most other patterns. There is no inherent limitation. I say “in principle” because it all depends on the specific software architecture and design features.

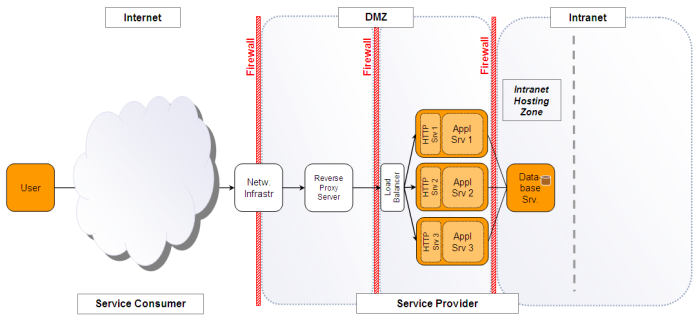

Generally speaking, clustering can be used in Intranet settings as well as deployments for users on the Internet. The figure below shows a sample deployment architecture for an Internet deployment in the second DMZ zone.

Please see the related patterns for how a clustered configuration can be deployed in an enhanced configuration. More details on clustering multi-tier applications will follow in a different post.

5. Related Patterns

- Single Node Deployment Configuration for Multi-Tier Network-Centric Software Applications

- Multi Node Deployment Configuration for Multi-Tier Network-Centric Software Applications

- A more complex Network Diagram (example)

The links to those patterns can be found in the section References.

References

[Coh2010] Cohen, D. 2010, “What do you mean by “Performance”?”, Persistent Storage for a Random Thought Generator [online] available at http://dannycohen.info/2010/04/02/what-do-you-mean-by-performance/

[Pic2010a] Pichler, M. 2010, “Architectural Pattern: Multi Node Deployment Configuration for Multi-Tier Network-Centric Software Applications”, A Practical Guide to Software Architecture [online] available at https://applicationarchitecture.wordpress.com/

[Pic2010b] Pichler, M. 2010, “Diagram: A more complex Network Diagram (example)”, A Practical Guide to Software Architecture [online] available at https://applicationarchitecture.wordpress.com/

[Pic2010c] Pichler, M. 2010, “Diagram: The Network Diagram”, A Practical Guide to Software Architecture [online] available at https://applicationarchitecture.wordpress.com/

[Pic2010d] Pichler, M. 2010, “Architectural Pattern: Single Node Deployment Configuration for Multi-Tier Network-Centric Software Applications”, A Practical Guide to Software Architecture [online] available at https://applicationarchitecture.wordpress.com/

Copyright © 2010 Michael Pichler